How to Avoid Cache Stampede or "Dogpile" Problem Upon Cache Expiry?

Understanding How to Avoid Sudden Traffic Burst When Cache Becomes Invalid

Cache seems to be the easiest solution for improving the latency of a system, which is required to return the same response to multiple clients for a given request. However, most of the time, the setup to re-populate the cache upon expiry misses a crucial scenario that, if it occurs, can bring down the entire system—a thundering herd problem combined with cache expiry.

What is the Cache Stampede Problem?

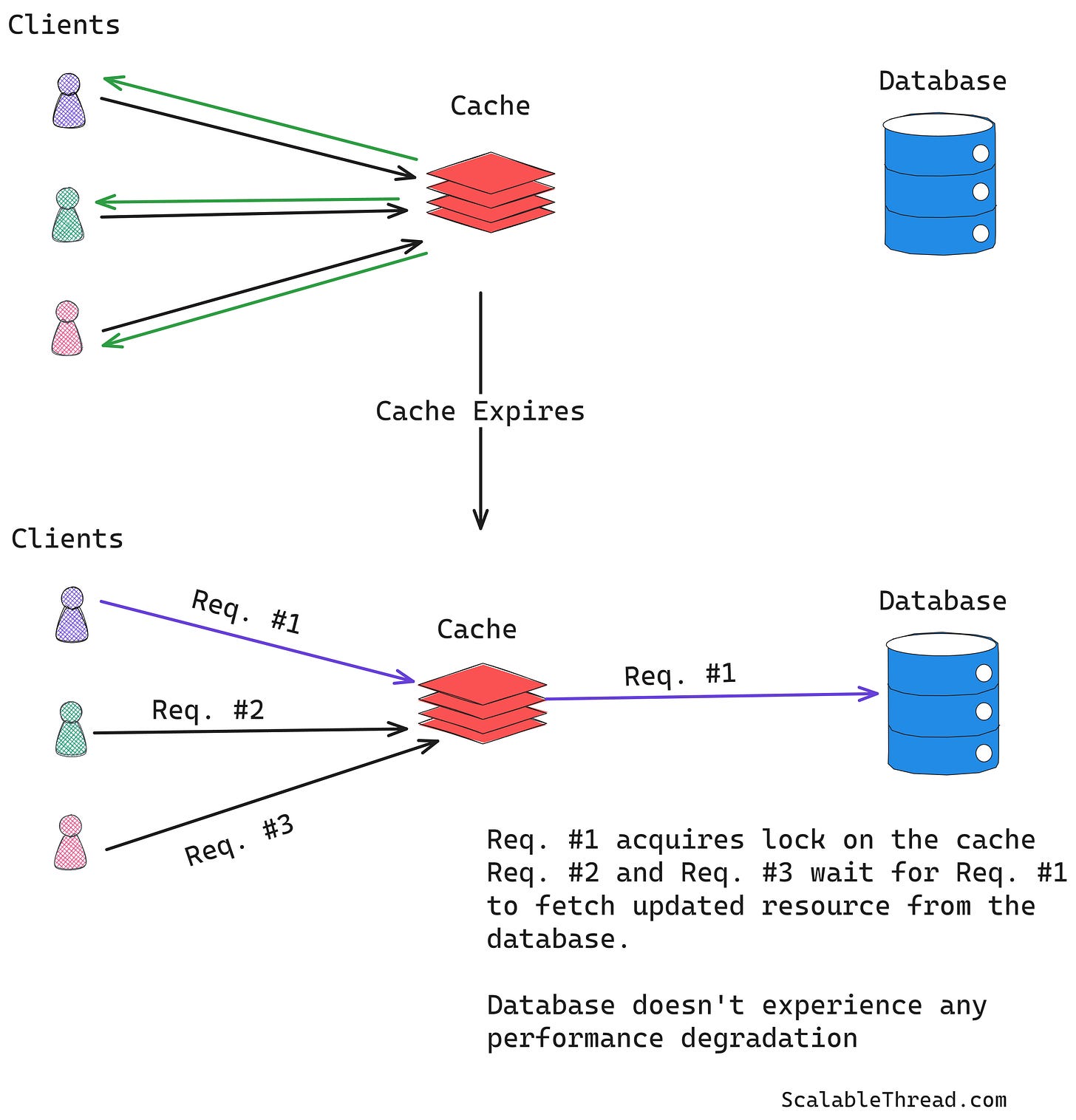

Imagine a scenario where your system is serving database reads through a cache. Upon every cache expiry, a request is sent to the database to re-populate the cache with an updated value. Now, during peak traffic times, a cache expiry happens. The result? All the client requests are directed to the database simultaneously to fetch the new value (or fetch while the backend resource is re-computing the new value for the first request after the cache miss), resulting in overwhelming the database or backend resources, eventually leading to performance degradation of the service. This situation is often termed as a Cache Stampede or a Dogpile Problem caused by cache expiry.

How to Handle Cache Stampede Problem?

Proactive Cache Population

This approach includes proactively re-populating the cache through an external process. Depending on the system's requirements, the external process can update the cache upon the expiry of the cached value, close to the expiry of the cached value, or at certain time intervals. The backend or database system can also be set up to dispatch events upon every state update, and the external process can subscribe to these events and update the cached data in the background.

Request Collapsing using Cache Locks

This approach requires setting up locks such that the first process requesting the cached resource close to expiry or after expiry acquires a lock and reaches the backend resource to request updated data. The lock is released once the updated data is fetched and the cache is updated with new data. While the lock is held, all other processes wait for the cache to update or return the expired value to the clients. Any retries in this scenario to check if the lock has been released can be done using exponential jitter and retry to avoid overwhelming the caching service.

Probabilistic Early Cache Expiration

This idea is similar to exponential jitter and retry, where the goal is to prevent all processes from simultaneously requesting a backend resource. In this approach, each process fetches the updated cache value by an independent probabilistic decision before the cache expires. The probability of re-populating the cache increases closer to the cache expiration. The independent probabilistic cache update allows each process to request the backend for updated value at different times, reducing the cache stampede effect as only some processes try to re-populate the cache simultaneously.

References

Wikipedia contributors. (2024a, March 5). Cache stampede. Wikipedia. https://en.wikipedia.org/wiki/Cache_stampede