How to Handle Backlogs in Queues?

Understanding the Approaches to Deal with Backlogs in Asynchronous Systems

Queues are a common choice for connecting a client (producer) to a server (consumer) in an asynchronous system. They are useful in scenarios such as:

When a client (producer) doesn't need an immediate response from the server (consumer), i.e., fire-and-forget scenarios.

When a client (producer) sends a spike of requests that the server (consumer) can't handle synchronously, the queue buffers them for asynchronous processing instead of dropping them.

When a client (producer) can't wait or experiences timeouts while the server (consumer) processes requests, queues allow the producer to continue to produce messages while the consumer processes at its own speed.

Although asynchronous systems are not meant to be extremely latency-sensitive compared to synchronous systems, they are still required to satisfy some service level objectives (SLOs) regarding latency for each request.

The Problem

Queue-based systems work well as long as the queues are not backlogged. Trouble starts when consumers stop processing messages because of a failure, or when producers flood the queue faster than consumers can keep up. Even after the system returns to normal, the backlog drastically increases latency, because consumers must work through the backlog before they can start on new messages. A backlog can also cause data in the queue to expire: if processing is delayed beyond a specific limit, the results from that data are no longer useful.
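
To make the latency impact concrete, here is a rough back-of-the-envelope sketch. It assumes constant arrival and service rates, which real systems rarely have; the function name and the numbers are illustrative only. The backlog only shrinks at the rate by which consumers outpace producers, so recovery can take far longer than the outage itself.

```python
def drain_time_seconds(backlog: int, arrival_rate: float, service_rate: float) -> float:
    """Seconds until the backlog is cleared, assuming constant rates (messages/second).

    Returns infinity when consumers are not faster than producers,
    because the backlog never shrinks in that case.
    """
    spare_capacity = service_rate - arrival_rate
    if spare_capacity <= 0:
        return float("inf")
    return backlog / spare_capacity


# Hypothetical numbers: a 10-minute consumer outage at 100 msg/s
# leaves 60,000 messages queued.
backlog = 10 * 60 * 100
print(drain_time_seconds(backlog, arrival_rate=100, service_rate=120))  # 3000.0
```

With these assumed numbers, a 10-minute outage takes 50 minutes to recover from, and every new message waits behind the backlog during that time.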

Strategies to Handle Queue Backlogs

Separate Queues for each Workload

In a system where a single queue is used across several services, a noisy neighbor can backlog the queue, thus impacting the other services depending on the same queue. Having a separate queue for each workload can make the system more resilient to such situations. However, this might not be the most cost-effective solution. Also, this approach might not scale well in a system with a high number of workloads.
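
As a minimal in-memory sketch of this idea (the `WorkloadQueues` class and the workload names are hypothetical, and a real system would use a message broker instead of Python deques), each workload gets its own queue, so a burst from one workload does not delay another:

```python
from collections import defaultdict, deque


class WorkloadQueues:
    """One FIFO queue per workload, created on first use."""

    def __init__(self):
        self._queues = defaultdict(deque)

    def enqueue(self, workload, message):
        self._queues[workload].append(message)

    def dequeue(self, workload):
        """Return the oldest message for this workload, or None if it has none."""
        q = self._queues[workload]
        return q.popleft() if q else None

    def depth(self, workload):
        return len(self._queues[workload])


queues = WorkloadQueues()
for i in range(1000):                 # a noisy workload floods its own queue
    queues.enqueue("reports", i)
queues.enqueue("billing", "invoice-1")

# The billing consumer is not stuck behind the 1000 report messages.
print(queues.dequeue("billing"))      # invoice-1
print(queues.depth("reports"))        # 1000
```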

Separate Queue for Backlog

In this approach, a separate queue can be created to handle the backlog. There can be two scenarios:

Separate the excess traffic at a Workload/Producer/Customer level

In this scenario, the producer or consumer throttles the traffic from a particular workload, producer, or customer according to its assigned rate limit. Instead of processing the excess traffic, it pushes those messages to a separate spillover queue. Messages moved to the spillover queue can then be deleted from the primary queue. The spillover queue is processed once the backlog in the primary queue has been reduced and system resources are available.
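
The sketch below illustrates one possible producer-side variant of this approach. It assumes a simple fixed-window counter per customer; the `SpilloverRouter` class, its method names, and the limit are all hypothetical, and a production system might prefer a token bucket and durable queues.

```python
from collections import defaultdict, deque


class SpilloverRouter:
    """Send each customer's messages to the primary queue up to a per-window
    limit; anything over the limit goes to the spillover queue."""

    def __init__(self, limit_per_window: int):
        self.limit = limit_per_window
        self.primary = deque()
        self.spillover = deque()
        self._counts = defaultdict(int)

    def start_new_window(self):
        """Reset the per-customer counters (call this once per rate-limit window)."""
        self._counts.clear()

    def route(self, customer, message):
        self._counts[customer] += 1
        if self._counts[customer] <= self.limit:
            self.primary.append((customer, message))
        else:
            self.spillover.append((customer, message))

    def next_message(self):
        """Serve the primary queue first; touch spillover only when primary is empty."""
        if self.primary:
            return self.primary.popleft()
        if self.spillover:
            return self.spillover.popleft()
        return None


router = SpilloverRouter(limit_per_window=2)
for i in range(5):
    router.route("customer-a", i)     # 3 of these exceed the limit
router.route("customer-b", 0)         # unaffected by customer-a's burst

print(len(router.primary), len(router.spillover))  # 3 3
```

Here `customer-b` is served right after the first two messages from `customer-a`, while the excess from `customer-a` waits in the spillover queue.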

Separate the old traffic

In a scenario where working on fresh messages is more important than processing the old messages in the backlog, consumers can dequeue a message from the queue, check its age, and push it to a separate backlog queue if the age of the message is more than a set threshold. This approach will allow the consumer to work on the fresh messages first and take care of the old traffic once the system resources have become available.
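
A minimal sketch of this age check follows. It assumes each message is a dict carrying an `enqueued_at` timestamp in seconds; the function name, field names, and threshold are illustrative assumptions, and the queues are plain in-memory deques.

```python
import time
from collections import deque


def process_fresh_first(primary, backlog, max_age_seconds, handle, clock=time.time):
    """Drain the primary queue: handle fresh messages immediately and move
    messages older than max_age_seconds to the backlog queue for later."""
    while primary:
        msg = primary.popleft()
        age = clock() - msg["enqueued_at"]
        if age > max_age_seconds:
            backlog.append(msg)       # sideline: process when resources free up
        else:
            handle(msg)


# Usage with a fixed clock so the example is deterministic.
now = 1_000.0
primary = deque([
    {"body": "old", "enqueued_at": now - 600},   # 10 minutes old
    {"body": "fresh", "enqueued_at": now - 5},   # 5 seconds old
])
backlog_queue = deque()
handled = []

process_fresh_first(primary, backlog_queue, max_age_seconds=60,
                    handle=lambda m: handled.append(m["body"]),
                    clock=lambda: now)
print(handled)                                # ['fresh']
print([m["body"] for m in backlog_queue])     # ['old']
```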

Dropping Expired Messages

In some systems, results from data older than a certain threshold are no longer useful. To work through the backlog quickly, these systems can skip processing expired messages and drop them instead.
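
As a hedged illustration, the sketch below drops expired messages while draining a queue. It assumes each message is a dict with an `enqueued_at` timestamp and that a single time-to-live (TTL) applies to every message; the names are hypothetical. Counting the drops is a design choice that makes the data loss visible in metrics.

```python
import time
from collections import deque


def process_dropping_expired(queue, ttl_seconds, handle, clock=time.time):
    """Handle every message that is still within its TTL and drop the rest.

    Returns the number of dropped messages so the caller can record it.
    """
    dropped = 0
    while queue:
        msg = queue.popleft()
        if clock() - msg["enqueued_at"] > ttl_seconds:
            dropped += 1              # expired: skip the expensive processing
            continue
        handle(msg)
    return dropped


# Usage with a fixed clock so the example is deterministic.
current_time = 5_000.0
pending = deque([
    {"body": "price-update-1", "enqueued_at": current_time - 120},
    {"body": "price-update-2", "enqueued_at": current_time - 90},
    {"body": "price-update-3", "enqueued_at": current_time - 2},
])
processed = []

dropped_count = process_dropping_expired(
    pending, ttl_seconds=30,
    handle=lambda m: processed.append(m["body"]),
    clock=lambda: current_time,
)
print(dropped_count, processed)       # 2 ['price-update-3']
```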

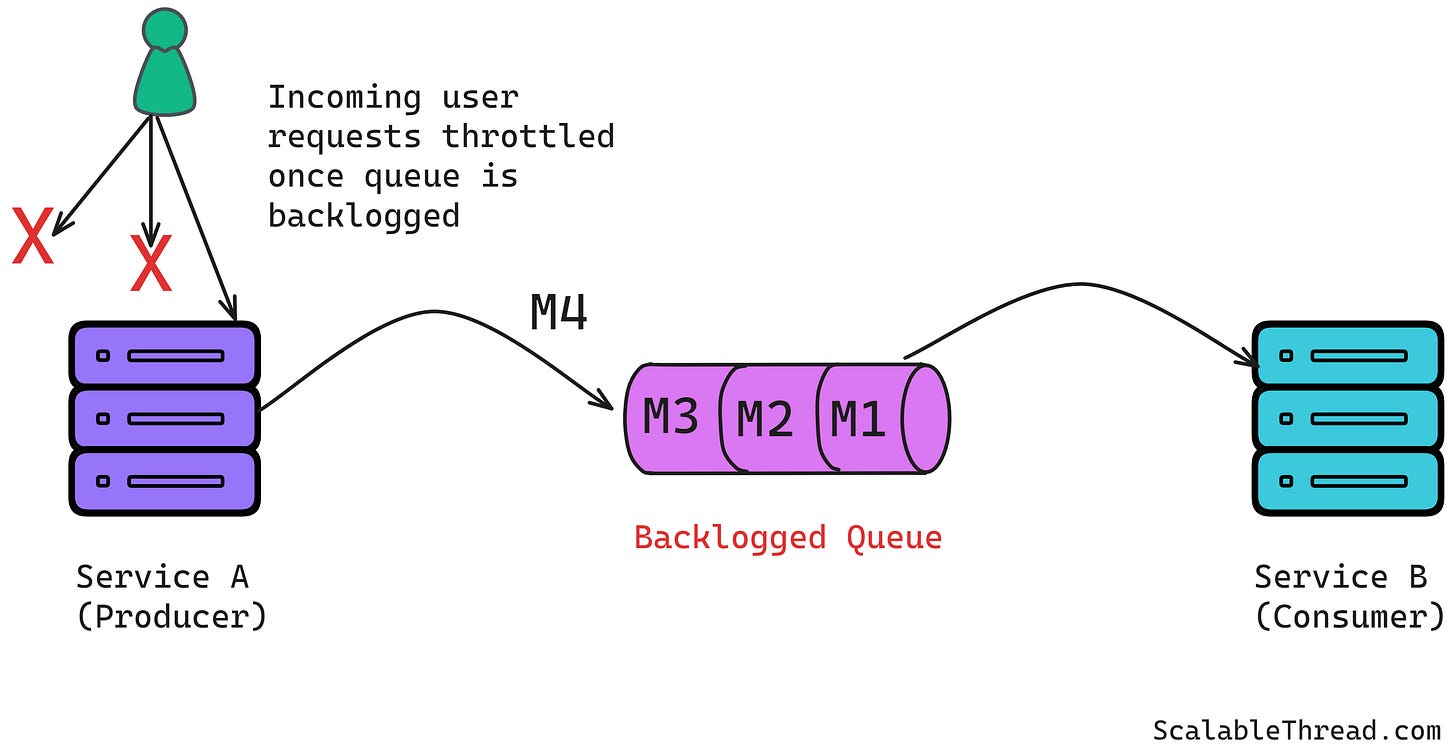

Sending Backpressure Upstream

Asynchronous systems are prone to the producer-consumer problem, in which producers generate messages faster than consumers can process them. Applying backpressure can mitigate it. In a system where rejecting user or producer requests is acceptable, the traffic sent to the queue can be controlled upstream to prevent the backlog from getting worse. However, in systems where it's not possible to reject user requests (such as orders in e-commerce), this approach might not be helpful.
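
One simple way to sketch backpressure is a bounded queue that rejects new messages when it is full, so the producer learns immediately that it should slow down, retry later, or return an error to its caller. The example uses Python's standard `queue.Queue`; the `try_enqueue` helper and the capacity of 2 are assumptions for illustration.

```python
import queue


def try_enqueue(q: queue.Queue, message) -> bool:
    """Enqueue without blocking. Return False when the queue is full so the
    producer can back off or reject the request upstream."""
    try:
        q.put_nowait(message)
        return True
    except queue.Full:
        return False


bounded = queue.Queue(maxsize=2)      # capacity chosen only for the example
results = [try_enqueue(bounded, i) for i in range(3)]
print(results)                        # [True, True, False]

bounded.get_nowait()                  # a consumer frees one slot
print(try_enqueue(bounded, 99))       # True
```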

Using Dead Letter Queues

Suppose the queue policy is to retry a failing message, or group of messages, a certain number of times before dropping it. If several messages in the backlog end up on the error path, whether because the messages are invalid or because of an issue on the consumer side, those retries increase processing latency and slow down the processing of the backlog. Instead of retrying for a long time, a Dead Letter Queue (DLQ) can be used to hold the messages that throw exceptions. These messages can then be retried automatically or manually.
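
The following is a minimal in-memory sketch of that flow, not how any particular broker implements it. It assumes messages are dicts with a `body` field; the `attempts` and `last_error` fields, the function name, and the limit of three attempts are hypothetical. Managed queue services usually offer a DLQ as configuration instead of consumer code.

```python
from collections import deque


def consume_with_dlq(primary, dead_letters, handle, max_attempts=3):
    """Process messages; after max_attempts failures, park the message in
    the dead letter queue instead of retrying it again."""
    while primary:
        msg = primary.popleft()
        try:
            handle(msg["body"])
        except Exception as exc:
            msg["attempts"] = msg.get("attempts", 0) + 1
            if msg["attempts"] >= max_attempts:
                msg["last_error"] = repr(exc)   # keep context for later inspection
                dead_letters.append(msg)
            else:
                primary.append(msg)             # retry later, behind other messages


done = []


def handler(body):
    # Hypothetical handler: one kind of message always fails.
    if body == "malformed":
        raise ValueError("cannot parse message")
    done.append(body)


main_queue = deque([{"body": "malformed"}, {"body": "order-1"}, {"body": "order-2"}])
dlq = deque()
consume_with_dlq(main_queue, dlq, handler)

print(done)                                     # ['order-1', 'order-2']
print([(m["body"], m["attempts"]) for m in dlq])  # [('malformed', 3)]
```

Re-queuing a failed message at the back of the queue keeps it from blocking healthy messages while it waits for its next attempt.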

References

Avoiding insurmountable queue backlogs. (n.d.). Amazon Web Services, Inc. https://aws.amazon.com/builders-library/avoiding-insurmountable-queue-backlogs/