How to Invalidate and Evict Data from Cache?

Understanding Different Cache Invalidation and Eviction Strategies

What is Cache Eviction?

Caching frequently accessed data is a popular technique for improving system performance, as cache storage provides low latency for reads and writes. However, cache systems often have limited capacity to store data. The application must remove unneeded items from the cache to meet performance goals or replace them with new ones at intervals. This process of selecting which data item to remove or replace in a cache is called Cache Eviction.

What is Cache Invalidation?

Cache usually holds a copy of data kept in persistent storage, which, if accessed directly, will incur a higher latency. This copy of data must be updated if the underlying source of truth in persistent storage changes. Not doing so makes the data in the cache and persistent storage inconsistent, which can pose a risk of reading and using outdated data from the cache, resulting in undesired outcomes. This process of updating invalid or stale data in the cache when the data in the primary data source is updated is known as Cache Invalidation.

Cache Eviction Strategies

Here are some of the cache eviction strategies:

Least Recently Used (LRU)

LRU algorithm removes the item that hasn’t been accessed for the longest period (a.k.a least recently used). For example, on a news website, a new or breaking news article will have a higher reader demand than some old news article. In this scenario, the oldest news article can be evicted from the cache and replaced with the latest article.

Least Frequently Used (LFU)

LFU keeps track of how often a particular data item is accessed. When the need to remove an item arises, it removes the item that has been accessed the least number of times, regardless of when it was last accessed. For example, the least frequently used query is invalidated first in a database query cache

First In First Out (FIFO)

As the name suggests, the entry in the cache for the most extended period is evicted first, regardless of how frequently or recently it has been accessed. For example, the old telemetry logs are truncated from the first, and new ones appear at the end.

Cache Invalidation Strategies

Here are some of the cache invalidation strategies:

Write-Through Cache

A Write-Through Cache propagates data updates to the cache and the underlying datastore simultaneously, ensuring consistency between the cache and the backing datastore. As a write-through cache, the backing datastore is updated first, and then the cache is updated before returning the response to the client. If the updates to the database fail, the cache is not updated, and the error response is returned to the client. The double writes to both the cache and the datastore before returning the response, which results in higher turnaround latency for a client update request.

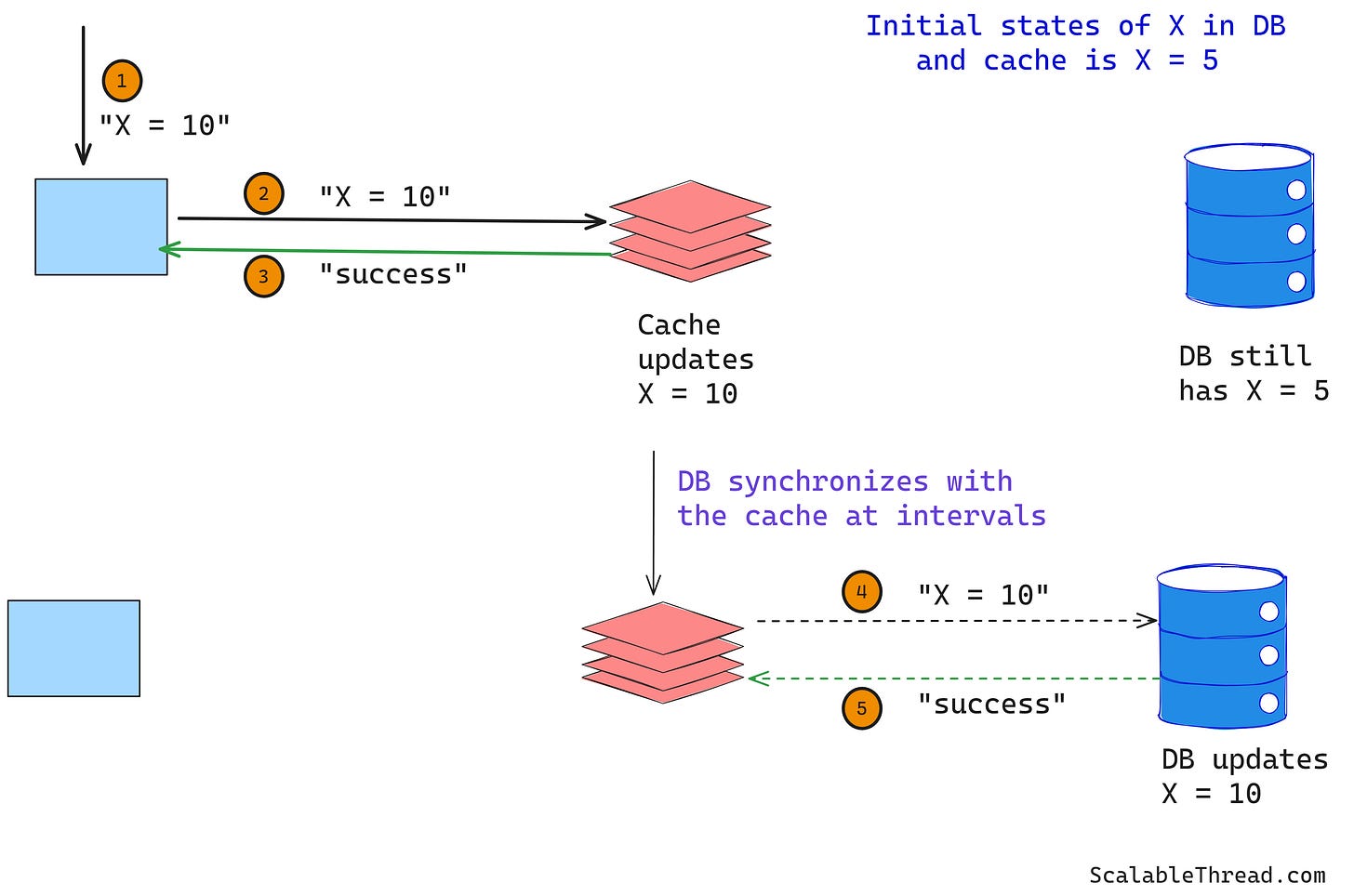

Write-Behind Cache

In this approach, all the latest data updates are pushed to the cache, and the primary storage is eventually updated at intervals behind the scenes. Compared to a write-through cache, this provides lower turnaround latency for client requests, which makes it quite effective when there is a high influx of write-heavy requests. However, in the event of a cache failure or crash, data loss is possible if the updates have not been propagated to the backing datastore yet.

Write-Around and Cache-Aside

This is a lazy loading approach in which the data updates are directly pushed to the primary datastore, skipping the cache, and the read requests continue to be served from the cache. The data in the cache is updated upon a cache miss. Upon a cache miss, the service reads the updated data from the primary storage and populates the cache before returning the response to the client. The cached data is returned to the client in case of a cache hit. This scheme prevents flooding of the cache with updates that will not be read but has a high turnaround latency for read requests for recently updated data in case of cache miss.