How to Optimize Performance with Cache Warming?

Optimizing Performance and User Experience in Large-Scale Distributed Systems

What is Cache Warming?

When a request arrives for data that isn't in the cache (cache miss or cold cache), the system must retrieve it from the primary database or service. This process introduces latency, as fetching data from disk-based databases is significantly slower than from an in-memory cache.

Cache warming preloads frequently accessed data into a cache so that high-demand data is already there when the first client request arrives, which avoids this performance penalty. This is especially important after system restarts and deployments, and ahead of traffic spikes.
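To make the difference between a cold and a warm cache concrete, here is a minimal sketch of a cache-aside read path with a miss counter. The `CacheAside` class and the `load_product` function are hypothetical stand-ins for illustration, not part of any particular library.

```python
class CacheAside:
    """Cache-aside helper that counts how often the backing store is hit."""

    def __init__(self, load_from_store):
        self._load = load_from_store  # assumed slow loader, e.g. a database query
        self._cache = {}
        self.misses = 0

    def get(self, key):
        if key in self._cache:
            return self._cache[key]      # cache hit: no trip to the store
        self.misses += 1                 # cache miss: pay the latency penalty
        value = self._load(key)
        self._cache[key] = value
        return value

    def warm(self, keys):
        """Preload keys so the first client request is already a hit."""
        for key in keys:
            self._cache[key] = self._load(key)


def load_product(product_id):
    # Stand-in for a slow read from the primary database.
    return {"id": product_id, "name": f"product-{product_id}"}


cold = CacheAside(load_product)
cold.get(1)          # first request misses and goes to the store

warm = CacheAside(load_product)
warm.warm([1])       # preload before any client request
warm.get(1)          # first request is served from the cache
```

In this sketch the cold instance records one miss for the first request, while the warmed instance records none.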

Cache Warming Strategies

Several strategies can be employed to warm a cache.

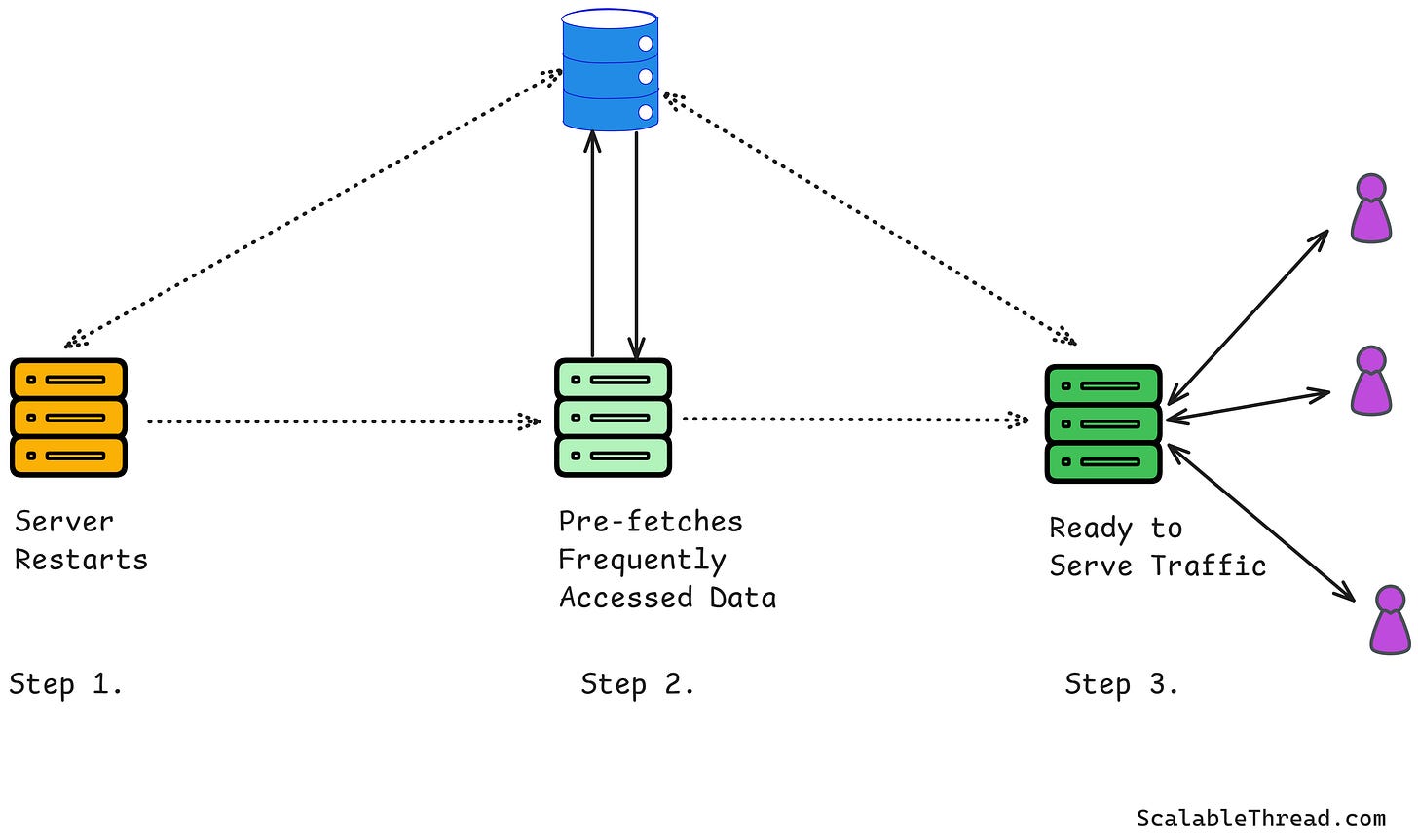

Pre-warming on Deployment

Immediately after a new service version is deployed, its in-memory cache is typically empty. A warming script or process then identifies the most critical or frequently accessed data and loads it into the cache. Preloading this data prevents an initial flood of cache misses and the thundering herd problem that follows, where many concurrent requests for the same missing data all hit the database at once.

For example, in a global e-commerce platform, product catalog data is accessed millions of times per hour. If a new application instance starts without a warm cache, its first thousand requests might hit the database directly, increasing response time and database load. The system avoids this bottleneck by warming the cache with the top-selling products before the instance accepts traffic.
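A rough sketch of this idea, assuming the instance exposes a readiness flag that a load balancer or orchestrator would check before routing traffic. The `Instance` class, `fetch_product`, and the list of top product IDs are all hypothetical names chosen for illustration.

```python
class Instance:
    """Application instance that warms its cache before reporting ready."""

    def __init__(self, fetch_product, top_product_ids):
        self._fetch = fetch_product          # assumed database accessor
        self._top_ids = top_product_ids      # assumed list of top-selling IDs
        self.cache = {}
        self.ready = False                   # a health check would expose this

    def start(self):
        for pid in self._top_ids:
            try:
                self.cache[pid] = self._fetch(pid)
            except Exception:
                # A failed preload does not block startup; that key is
                # simply loaded later, on its first cache miss.
                continue
        self.ready = True

    def handle(self, pid):
        if not self.ready:
            raise RuntimeError("instance is still warming")
        if pid not in self.cache:
            self.cache[pid] = self._fetch(pid)
        return self.cache[pid]


db_calls = []

def fetch_product(pid):
    db_calls.append(pid)                     # record every database read
    return {"id": pid}


instance = Instance(fetch_product, top_product_ids=[101, 102, 103])
instance.start()
calls_after_warmup = len(db_calls)
instance.handle(101)                         # served from the warm cache
```

Here the three warm-up reads are the only database calls; the request for product 101 adds none.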

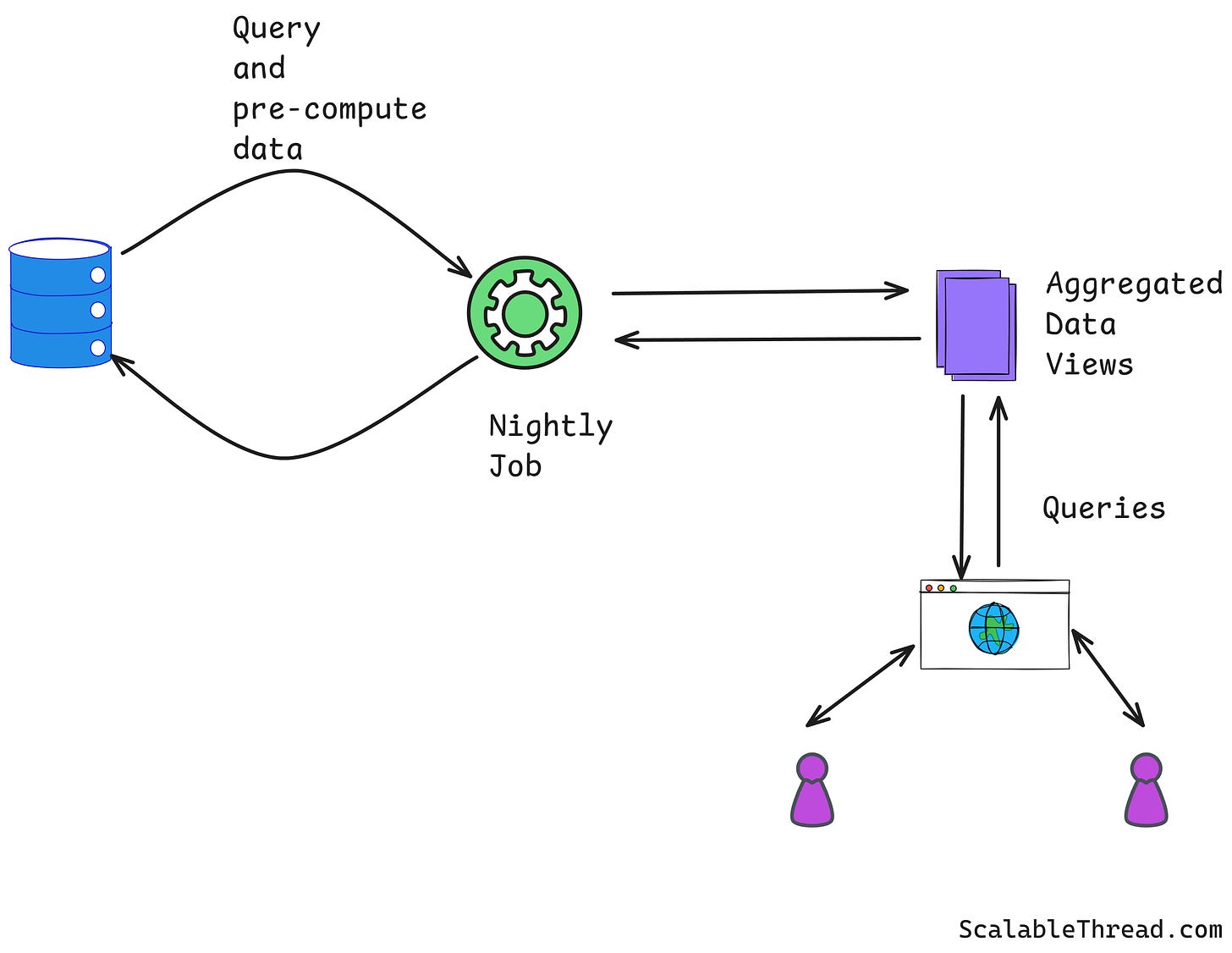

Scheduled Warming

Scheduled warming involves running a batch job at regular intervals (e.g., hourly or daily) to refresh and repopulate the cache. This is useful for data that doesn't need to be real-time but is expensive to compute.

A data analytics platform might run a nightly job to pre-compute and cache complex analytical queries for its customers. When users request their daily report, the pre-computed result is served directly from the cache, avoiding a resource-intensive database query.
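The nightly job could look roughly like the sketch below. `compute_daily_report` is a hypothetical placeholder for the expensive query, and `run_periodically` is one simple way to repeat the job in-process; in practice a cron job or a workflow scheduler would often play that role instead.

```python
import threading

report_cache = {}


def compute_daily_report(customer_id):
    # Stand-in for a resource-intensive analytical query.
    return {"customer": customer_id, "total": sum(range(customer_id * 1000))}


def warm_reports(customer_ids):
    """One run of the batch job: recompute each report and overwrite the cache."""
    for cid in customer_ids:
        report_cache[cid] = compute_daily_report(cid)


def run_periodically(job, interval_seconds, stop_event):
    """Run job immediately, then again every interval_seconds until stopped."""
    while not stop_event.is_set():
        job()
        # wait() returns early if stop_event is set during the pause.
        stop_event.wait(interval_seconds)


# A single run of the job, as the scheduler would trigger it.
warm_reports([1, 2, 3])
```

To repeat the job daily inside the process, `run_periodically` could be started in a background thread with an interval of 86400 seconds and a `threading.Event` used to stop it on shutdown.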

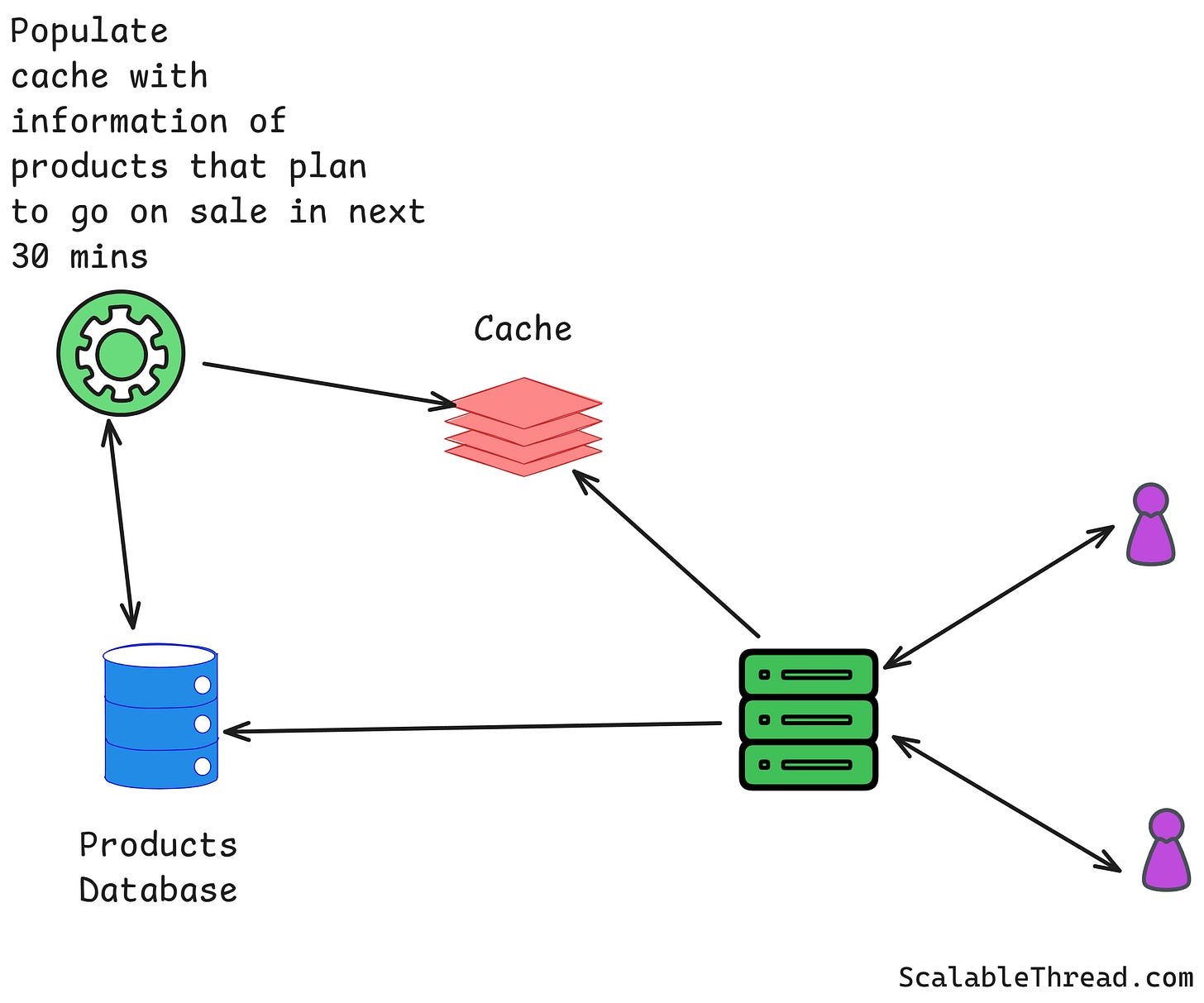

Event-Driven Warming

This dynamic strategy triggers the cache warming process in response to specific events within the system.

For instance, before an e-commerce flash sale, warming the cache with the relevant content and product data can prevent the system from crashing under the initial load. Similarly, if an item's inventory drops below a certain threshold, that event might trigger a process to cache related or alternative products, anticipating that users will look for substitutes.
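Both examples can be sketched with a tiny in-process publish/subscribe mechanism. The event names, the payload fields, and the `ALTERNATIVES` mapping are assumptions made for illustration; a real system would more likely consume these events from a message broker.

```python
from collections import defaultdict

handlers = defaultdict(list)   # event name -> list of subscribed functions
cache = {}

# Hypothetical mapping from a product to its substitutes.
ALTERNATIVES = {"sku-1": ["sku-7", "sku-9"]}


def on(event_name):
    """Decorator that subscribes a function to an event."""
    def register(fn):
        handlers[event_name].append(fn)
        return fn
    return register


def publish(event_name, payload):
    for fn in handlers[event_name]:
        fn(payload)


def load_product(sku):
    # Stand-in for a database read.
    return {"sku": sku}


@on("flash_sale_scheduled")
def warm_sale_items(payload):
    # Preload every product that takes part in the upcoming sale.
    for sku in payload["skus"]:
        cache[sku] = load_product(sku)


@on("inventory_changed")
def warm_alternatives(payload):
    # Only act when stock falls below the configured threshold.
    if payload["stock"] < payload["threshold"]:
        for sku in ALTERNATIVES.get(payload["sku"], []):
            cache[sku] = load_product(sku)


publish("flash_sale_scheduled", {"skus": ["sku-1", "sku-2"]})
publish("inventory_changed", {"sku": "sku-1", "stock": 3, "threshold": 5})
```

After these two events, the cache holds the two sale items plus the two substitutes for `sku-1`.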

Just-in-Time (JIT) Warming

When a cache miss occurs for an item, the system fetches it from the database. However, instead of just caching that single item, it also fetches and caches related items that will likely be requested next.

For example, if a user requests page 1 of a product listing, the system could proactively fetch and cache pages 2 and 3, assuming the user will likely navigate to them.
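A minimal sketch of the pagination example follows. The names `load_page` and `get_page`, the page size, and the prefetch depth of two pages are illustrative assumptions. The prefetch here runs synchronously to keep the example short; a real implementation would likely do it in the background so the original request is not slowed down.

```python
PAGE_SIZE = 2
PRODUCTS = [f"product-{i}" for i in range(1, 11)]  # 10 items, i.e. 5 pages

page_cache = {}
db_reads = []   # records which pages were read from the database


def load_page(page):
    # Stand-in for a paginated database query (pages are 1-indexed).
    db_reads.append(page)
    start = (page - 1) * PAGE_SIZE
    return PRODUCTS[start:start + PAGE_SIZE]


def get_page(page, prefetch=2):
    if page in page_cache:
        return page_cache[page]

    # Cache miss: load the requested page...
    result = load_page(page)
    page_cache[page] = result

    # ...and also the next few pages the user will likely open.
    for nxt in range(page + 1, page + 1 + prefetch):
        if nxt not in page_cache:
            items = load_page(nxt)
            if not items:        # ran past the last page
                break
            page_cache[nxt] = items
    return result


get_page(1)   # miss: loads pages 1, 2 and 3
get_page(2)   # hit
get_page(3)   # hit
```

Only the first call touches the database in this sketch; pages 2 and 3 are already cached when the user navigates to them.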

Cons of Cache Warming

Despite its benefits, cache warming has drawbacks and requires careful implementation.

Increased System Complexity: Implementing cache warming adds another moving part to the system architecture. It requires writing, managing, and monitoring warming scripts or services. This introduces new potential points of failure that must be handled.

Resource Consumption Spikes: Running a cache warming process can be resource-intensive. It can cause a sudden spike in CPU, memory, and network I/O on both the application servers and the backend databases. If not managed carefully, a warming process could negatively impact the performance of the live system. This is often mitigated by running warmers on dedicated instances or throttling the warming process.
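Throttling can be as simple as loading keys in small batches with a pause in between. The sketch below assumes a hypothetical `warm_throttled` helper; the batch size and pause length are arbitrary illustrative values that would need tuning against the real backend.

```python
import time


def warm_throttled(keys, load, cache, batch_size=100, pause_seconds=0.05,
                   sleep=time.sleep):
    """Warm the cache in batches, pausing between batches to limit backend load.

    Returns the number of batches processed.
    """
    batches = 0
    for start in range(0, len(keys), batch_size):
        for key in keys[start:start + batch_size]:
            cache[key] = load(key)
        batches += 1
        # Pause only if another batch follows.
        if start + batch_size < len(keys):
            sleep(pause_seconds)
    return batches


cache = {}
pauses = []   # capture the pauses instead of actually sleeping in this demo
batches = warm_throttled(
    list(range(250)),
    load=lambda k: k * 2,     # stand-in for a database read
    cache=cache,
    batch_size=100,
    pause_seconds=0.05,
    sleep=pauses.append,
)
```

With 250 keys and a batch size of 100, the warmer runs three batches and pauses twice, spreading the load over time instead of issuing all reads at once.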

Stale Data: A challenge with any caching strategy is data staleness. A cache warmed with a data snapshot can quickly become outdated if the source data changes.
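One common way to bound staleness, shown here as an assumption rather than something the strategies above prescribe, is to give warmed entries a time-to-live (TTL) and to invalidate them explicitly when the source data changes. The `TTLCache` class below is a hypothetical minimal sketch with an injectable clock so the expiry can be demonstrated without waiting.

```python
import time


class TTLCache:
    """Cache whose entries expire ttl_seconds after they are written."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}   # key -> (value, expires_at)

    def set(self, key, value):
        self._entries[key] = (value, self._clock() + self._ttl)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._entries[key]   # expired: force a reload from the source
            return None
        return value

    def invalidate(self, key):
        """Drop an entry immediately, e.g. when the source row is updated."""
        self._entries.pop(key, None)


now = [0.0]                                  # fake clock for the demo
prices = TTLCache(ttl_seconds=60, clock=lambda: now[0])

prices.set("price:1", 100)                   # entry written by the warmer
fresh = prices.get("price:1")                # still within the TTL

now[0] = 61.0                                # 61 seconds later
expired = prices.get("price:1")              # entry has expired
```

In this sketch `fresh` is the warmed value and `expired` is `None`, so the next read would fall through to the source and pick up any change.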
